Microsoft Excel is a core tool for organizing and analyzing data. Duplicate entries are a persistent problem: whatever the size of your spreadsheet or dataset, they can compromise the integrity of your data and bias your analyses. This tutorial shows how to identify and eliminate duplicates in Excel so that your datasets stay accurate and dependable.

Understanding the Impact of Duplicate Data in Excel

In the data-driven era, the accuracy of information is paramount for effective decision-making. Duplicate entries in Excel can wreak havoc on your data, introducing errors and distortions that may go unnoticed until it's too late. Consider a sales dataset where duplicate transactions can artificially inflate revenue figures, leading to misguided business strategies. The impact of duplicates extends across various industries, emphasizing the need for robust duplicate detection and removal strategies. Duplicate data can result from various sources, including data entry errors, system glitches, or intentional data manipulation. Inaccurate reporting due to duplicates can lead to poor business decisions, financial losses, and a lack of trust in the data itself. Implementing robust data validation protocols and utilizing advanced deduplication algorithms can significantly mitigate these risks. This not only ensures accurate reporting but also enhances the efficiency of automated workflows, fostering a more reliable and streamlined data management ecosystem.

How to Identify Duplicate Data in Excel

Before delving into the intricacies of duplicate removal, it's crucial to master the art of identification. Excel provides users with several built-in tools to identify duplicates, from simple techniques like conditional formatting to more complex functions like COUNTIF, IF, and VLOOKUP. Understanding which columns to target and how to effectively use these tools is the first step toward ensuring data accuracy.

Conditional Formatting for Visual Identification

Conditional formatting is a user-friendly feature in Excel that allows you to visually highlight duplicate values. By selecting the desired range and applying conditional formatting rules, duplicate values can be instantly identified through color differentiation or other formatting options. This provides a quick overview of potential duplicates within your dataset, enabling you to focus on specific areas that require further investigation.

- Using COUNTIF for Precise Duplicate Counts: For a more quantitative approach, Excel's COUNTIF function is invaluable. It enables you to count the occurrences of a specific value within a range, helping you pinpoint the duplicates in your dataset. By applying COUNTIF to relevant columns, you can identify entries that occur more than once, offering a precise count of duplicate occurrences.

- Dynamic Duplicate Detection with IF and VLOOKUP: The IF function in Excel allows for conditional logic, enabling users to create custom rules for duplicate identification. By combining the IF function with other Excel functions or logical operators, you can design intricate criteria for identifying duplicates based on specific conditions. For example, you might want to flag duplicates only if they meet certain criteria, such as having the same values in multiple columns or meeting a certain numerical threshold. VLOOKUP, on the other hand, is particularly useful when you're comparing data across different columns or sheets. This function allows you to search for a value in a specified range and retrieve data from a corresponding column. By utilizing VLOOKUP in conjunction with other functions, you can establish intricate relationships between datasets, facilitating more nuanced duplicate detection.

- Excel's Built-in Functions for Duplicate Detection: Excel's repertoire of functions empowers users to identify duplicate values with precision. The COUNTIF function, for instance, allows users to count occurrences of a specific value, aiding in the detection of duplicates. When combined with the IF and VLOOKUP functions, users gain the ability to create dynamic and customizable duplicate detection mechanisms. Highlighting and filtering duplicate values through conditional formatting further enhances the visual identification process, providing a comprehensive toolkit for users at all levels.

- Highlighting and Filtering Duplicate Values: Conditional formatting is not limited to visual identification; combined with Excel's filter, it can also isolate duplicate values. Apply a conditional formatting rule that highlights duplicates, then filter the column by cell color to show only the highlighted entries. This step is crucial in the preliminary stages of data cleaning, helping you understand the extent of duplication in your dataset.
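For readers who want to see the logic behind these formulas outside the spreadsheet, here is a minimal Python sketch of the two checks described above. It is an illustration, not Excel's implementation: the sample rows, the column names (`order_id`, `customer`), and both function names are hypothetical. In Excel itself, a helper-column formula along the lines of `=COUNTIF($A$2:$A$100, A2)>1` expresses the single-column version of the first check (the range is an assumption; adjust it to your data).

```python
from collections import Counter

# Hypothetical sample rows; the column names are illustrative only.
rows = [
    {"order_id": "1001", "customer": "Acme"},
    {"order_id": "1002", "customer": "Globex"},
    {"order_id": "1001", "customer": "acme"},
    {"order_id": "1003", "customer": "Acme"},
]


def flag_duplicates(rows, key_columns):
    """Return (occurrence count, is_duplicate) per row, like a COUNTIF helper column.

    COUNTIF ignores letter case, so the sketch compares case-folded text.
    """
    keys = [tuple(str(row[col]).casefold() for col in key_columns) for row in rows]
    counts = Counter(keys)
    return [(counts[key], counts[key] > 1) for key in keys]


def present_in_other(values, other_values):
    """Mark each value that also appears in a second list.

    This mirrors using a lookup such as VLOOKUP to check one sheet against another.
    """
    lookup = {str(value).casefold() for value in other_values}
    return [str(value).casefold() in lookup for value in values]


# Flag rows that repeat on the combination of both columns.
flags = flag_duplicates(rows, ["order_id", "customer"])
for row, (count, is_dup) in zip(rows, flags):
    print(row["order_id"], row["customer"], count, "DUPLICATE" if is_dup else "")

# Check which IDs from one list also exist in another list.
print(present_in_other(["1001", "1004"], ["1001", "1002", "1003"]))
```

Passing several column names to `flag_duplicates` corresponds to flagging a row only when it repeats across multiple columns, which is the "custom criteria" case described for IF-based rules.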

Advanced Filter Option for Customized Analysis

For users seeking more customization in their duplicate identification process, Excel's Advanced Filter option provides a versatile solution. This feature allows you to set complex criteria for filtering data, making it an ideal choice for scenarios where standard tools may fall short. To use the Advanced Filter, you'll need to create a criteria range that defines the conditions for identifying duplicates. This criteria range can include multiple columns and intricate logical statements, providing a high level of flexibility. Once the criteria are established, the Advanced Filter will generate a filtered list based on the specified conditions, allowing you to focus solely on the duplicates that meet your criteria. This level of customization is particularly beneficial when dealing with datasets with specific requirements for duplicate identification. Whether you're working with intricate data relationships or need to filter duplicates based on a combination of attributes, the Advanced Filter option empowers you to tailor the analysis to your unique needs.

Step-by-Step Guide to Removing Duplicates in Excel

- Backup Your Data: Before initiating the removal process, it's prudent to create a backup of your dataset. This precautionary step ensures that you have a copy of the original data in case any unforeseen issues arise during the removal process.

- Select the Data Range: Begin by selecting the range of data from which you want to remove duplicates. This can be done by clicking and dragging your cursor to highlight the relevant cells.

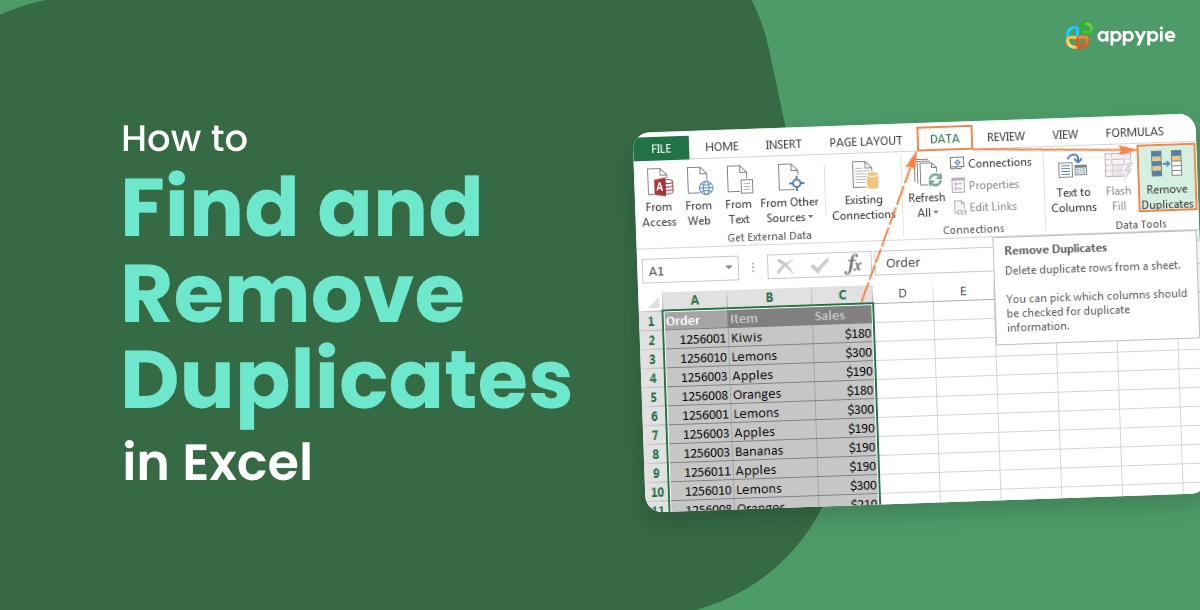

- Access the Remove Duplicates Feature: Navigate to the "Data" tab on the Excel ribbon and locate the "Remove Duplicates" button. Clicking on this button opens the Remove Duplicates dialog box.

- Choose Columns for Analysis: In the dialog box, Excel displays a list of all columns in your selected range. You have the flexibility to choose the specific columns that should be considered when identifying duplicates. Carefully select the columns based on the nature of your data.

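- Define Criteria for Duplicates: The columns you check in the dialog box are the criteria. Excel treats two rows as duplicates only when they match in every checked column, so depending on your dataset you may want to consider all columns or focus on specific attributes. If your range includes a header row, make sure the "My data has headers" box is checked so that the header is not treated as data.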

- Review and Confirm: Before finalizing the removal process, take a moment to review your selections in the Remove Duplicates dialog box. Ensure that the chosen columns and criteria align with your data-cleaning goals.

- Execute the Removal: Once you are satisfied with your selections, click the "OK" button to initiate the removal process. Excel compares the selected columns, keeps the first occurrence of each entry, and deletes the later repeats.

- Review the Summary: After completing the removal, Excel displays a message reporting how many duplicate values were removed and how many unique values remain. This summary offers transparency into the changes made to your dataset.

- Examine the Cleaned Dataset: Take the time to review the cleaned dataset and verify that the removal process aligns with your expectations. This step ensures that removing duplicates has not inadvertently affected other aspects of your data.
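The removal steps above can also be sketched in Python, which may help when reasoning about what the Remove Duplicates command will do before running it. This is an illustration under stated assumptions, not Excel's actual implementation: the sample rows, the column names (`invoice`, `amount`), the function name, and the case-insensitive comparison are all assumptions made for the example.

```python
# Hypothetical sample data; the column names are illustrative only.
rows = [
    {"invoice": "INV-1", "amount": "250"},
    {"invoice": "INV-2", "amount": "100"},
    {"invoice": "INV-1", "amount": "250"},
    {"invoice": "INV-2", "amount": "175"},
]


def remove_duplicates(rows, key_columns):
    """Keep the first row for each key and drop later repeats.

    Returns the cleaned rows plus the removed and remaining counts,
    similar to the summary shown after the removal.
    """
    seen = set()
    cleaned = []
    for row in rows:
        # Assumption: values are compared as case-folded text.
        key = tuple(str(row[col]).casefold() for col in key_columns)
        if key in seen:
            continue  # a later repeat of a key already kept
        seen.add(key)
        cleaned.append(row)
    removed = len(rows) - len(cleaned)
    return cleaned, removed, len(cleaned)


# Step 1: back up the data before changing anything.
backup = [dict(row) for row in rows]

# Steps 2-7: choose the columns to compare, then run the removal.
cleaned, removed, remaining = remove_duplicates(rows, ["invoice", "amount"])

# Steps 8-9: review the summary and inspect the cleaned rows.
print(f"{removed} duplicate rows removed; {remaining} unique rows remain.")
for row in cleaned:
    print(row)
```

The choice of columns changes the outcome. Matching on `invoice` and `amount` together removes only the exact repeat of INV-1, while matching on `invoice` alone would also drop the second INV-2 row even though its amount differs.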

Best Practices for Data Maintenance

As a parting note, this guide offers essential best practices for ongoing data maintenance. From regular checks for duplicates to implementing data validation rules, these practices ensure that your Excel sheets remain accurate and reliable over time. Consistent data hygiene practices contribute to the longevity of your datasets and form the foundation for sound decision-making.

- Regular Audits for Duplicates: Performing regular audits for duplicates should be a cornerstone of your data maintenance routine. Establish a schedule for duplicate checks, especially if your dataset undergoes frequent updates. This proactive approach helps identify and address duplicates before they accumulate and potentially impact your analyses.

- Data Validation Rules: Implementing data validation rules adds an extra layer of protection against duplicate entries. Excel's Data Validation feature lets you set validation criteria for specific columns. There is no built-in "unique values" rule, but a Custom rule with a formula such as =COUNTIF($A$2:$A$100,A2)=1 rejects an entry that already exists in the range (adjust the range to your data). Rules like this create a preemptive barrier that minimizes the likelihood of duplicates entering your dataset.

- Utilize Data Quality Tools: Explore third-party data quality tools that complement Excel's native features. These tools often provide advanced functionalities, such as fuzzy matching algorithms for handling variations in data entry. Integrating such tools into your data maintenance workflow enhances the accuracy and efficiency of duplicate detection and removal processes.

- Educate Users on Data Entry Best Practices: Empower individuals responsible for data entry with knowledge of best practices. Providing training on proper data entry techniques, such as avoiding copy-pasting errors and double-checking entries, contributes to the prevention of duplicate issues at the source.

- Version Control and Backups: Establish a robust version control system to track changes to your datasets over time. Regularly create backups before initiating significant data operations, ensuring that you have a safety net in case unexpected issues arise during duplicate removal or other processes.

- Document Data Cleaning Procedures: Documenting your data cleaning procedures is essential for knowledge transfer and internal auditing. Create a comprehensive guide that outlines the steps taken during duplicate identification and removal. This documentation serves as a reference for future users and facilitates a standardized approach to data maintenance within your organization.

- Collaborate with IT and Database Administrators: For organizations managing large and complex datasets, collaboration with IT and database administrators is vital. These professionals can provide insights into database structures, optimize queries for duplicate identification, and offer guidance on maintaining data integrity within the broader organizational framework.
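Two of the practices above, rejecting duplicates at entry time and fuzzy matching for variations in data entry, can be sketched with Python's standard library. This is a rough illustration rather than a substitute for a dedicated data quality tool: the function names, the sample customer names, and the 0.9 similarity threshold are all assumptions chosen for the example.

```python
from difflib import SequenceMatcher


def normalize(value):
    """Collapse letter case and extra whitespace before comparing."""
    return " ".join(str(value).split()).casefold()


def can_add(existing, candidate):
    """Validation-style check: allow an entry only if it is not already present."""
    return normalize(candidate) not in {normalize(value) for value in existing}


def near_duplicates(values, threshold=0.9):
    """Return pairs of values whose similarity ratio meets the assumed threshold."""
    pairs = []
    for i in range(len(values)):
        for j in range(i + 1, len(values)):
            ratio = SequenceMatcher(
                None, normalize(values[i]), normalize(values[j])
            ).ratio()
            if ratio >= threshold:
                pairs.append((values[i], values[j]))
    return pairs


# Hypothetical customer names with a small data-entry variation.
customers = ["Acme Corp", "Globex", "Acme Corp."]

print(can_add(customers, "  ACME corp "))  # same name after normalization
print(can_add(customers, "Initech"))       # genuinely new entry
print(near_duplicates(customers))
```

The pairwise comparison is quadratic in the number of values, so a sketch like this suits spot checks on a single column rather than very large datasets. Pairs it reports are candidates for review, not confirmed duplicates.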

Conclusion

Mastering the art of finding and removing duplicates in Excel is a fundamental skill for anyone working with data. As datasets grow in complexity and size, the ability to navigate and cleanse data becomes increasingly crucial. With the insights and techniques shared in this guide, users can embark on a journey towards maintaining pristine and reliable datasets in Microsoft Excel, unlocking the true potential of their data-driven endeavors.