ID-Animator: Zero-Shot Identity-Preserving Human Video Generation

- Published on April 24, 2024 11:36 am

- Editor: Yuvraj Singh

Generating high-fidelity human videos with specified identities has been a significant challenge in the content generation community. Existing techniques often struggle to strike a balance between training efficiency and identity preservation, either requiring tedious case-by-case fine-tuning or failing to accurately capture the identity details in the video generation process.

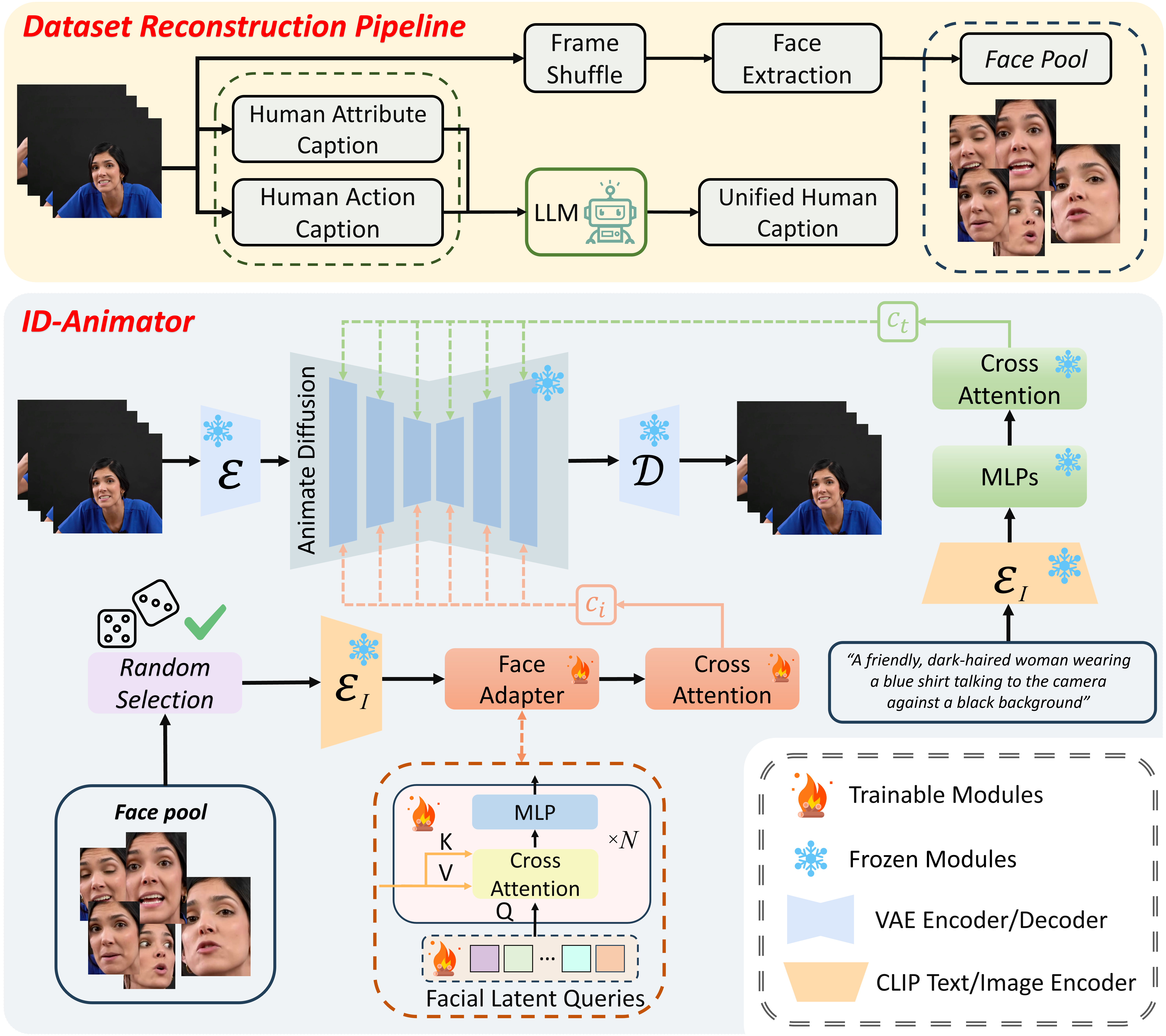

In this study, the researchers present ID-Animator, a zero-shot human video generation approach that can perform personalized video generation using a single reference facial image, without the need for further training. ID-Animator builds upon existing diffusion-based video generation backbones, incorporating a face adapter to encode the ID-relevant embeddings from learnable facial latent queries.
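As a rough illustration of the "learnable facial latent queries" idea, the sketch below lets a small set of query vectors attend over face-feature tokens to produce a fixed number of ID embeddings. This is an assumption about a common adapter design, not the authors' implementation: the single-head attention without projection layers, the dimensions, and the names `latent_queries`, `face_tokens`, and `cross_attend` are all hypothetical, and random numbers stand in for trained parameters and real face-encoder outputs.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attend(queries, features):
    """Single-head cross-attention with no projections (a simplification).

    Each query produces one output vector: a softmax-weighted average of
    the feature tokens, weighted by scaled dot-product similarity.
    """
    dim = len(features[0])
    outputs = []
    for q in queries:
        scores = [
            sum(qi * fi for qi, fi in zip(q, f)) / math.sqrt(dim)
            for f in features
        ]
        weights = softmax(scores)
        outputs.append(
            [sum(w * f[d] for w, f in zip(weights, features)) for d in range(dim)]
        )
    return outputs


rng = random.Random(0)
DIM, NUM_QUERIES, NUM_FACE_TOKENS = 8, 4, 16  # hypothetical sizes

# Assumption: in a real model the queries are trained parameters and the
# face tokens come from an image encoder; here both are random stand-ins.
latent_queries = [[rng.gauss(0, 1) for _ in range(DIM)] for _ in range(NUM_QUERIES)]
face_tokens = [[rng.gauss(0, 1) for _ in range(DIM)] for _ in range(NUM_FACE_TOKENS)]

id_embeddings = cross_attend(latent_queries, face_tokens)
print(len(id_embeddings), len(id_embeddings[0]))  # prints: 4 8
```

The point of the design is that the number of ID embeddings is set by the number of queries, not by the size of the reference image, so the video backbone always receives a fixed-length identity condition.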

To facilitate the extraction of identity information during video generation, the researchers introduce an ID-oriented dataset construction pipeline. This pipeline incorporates a decoupled human attribute and action captioning technique, leveraging a constructed facial image pool. Additionally, a random face reference training method is devised to precisely capture the ID-relevant embeddings from reference images, thus improving the fidelity and generalization capacity of the model for ID-specific video generation.
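The two data-side ideas above can be sketched together: a caption assembled from separately written attribute and action descriptions, and a reference face drawn at random from a per-clip face pool. The article does not give implementation details, so everything here is an assumption for illustration, including the `VideoSample` fields, the comma-joined caption format, the file names, and the `build_training_example` helper.

```python
import random
from dataclasses import dataclass


@dataclass
class VideoSample:
    """Hypothetical record for one training clip."""
    clip_id: str
    attribute_caption: str  # describes the person's appearance
    action_caption: str     # describes what the person is doing
    face_pool: list         # face crops collected for this clip


def build_training_example(sample, rng):
    """Assemble one training example from a clip.

    Assumption: the decoupled captions are simply joined, and the reference
    face is drawn uniformly from the pool instead of being tied to a
    specific target frame.
    """
    caption = f"{sample.attribute_caption}, {sample.action_caption}"
    reference_face = rng.choice(sample.face_pool)
    return {
        "clip_id": sample.clip_id,
        "caption": caption,
        "reference_face": reference_face,
    }


sample = VideoSample(
    clip_id="clip_0001",
    attribute_caption="a woman with short dark hair wearing a red scarf",
    action_caption="walking along a beach and waving",
    face_pool=["face_000.png", "face_001.png", "face_002.png"],
)

rng = random.Random(42)
example = build_training_example(sample, rng)
print(example["caption"])
print(example["reference_face"])
```

Drawing the reference at random means the model cannot rely on pose, lighting, or expression shared between the reference and the target frames, which is one plausible reading of why this would push the adapter toward identity-relevant features.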

According to the researchers, extensive experiments show that ID-Animator generates personalized human videos better than previous models. The method is also compatible with popular pre-trained text-to-video (T2V) models such as AnimateDiff and with various community backbone models, which makes it readily extensible to real-world applications where identity preservation matters during video generation.